Apache Spark is a fast cluster computing framework which is used for processing, querying and analyzing Big data. Being based on In-memory computation, it has an advantage over several other big data Frameworks.

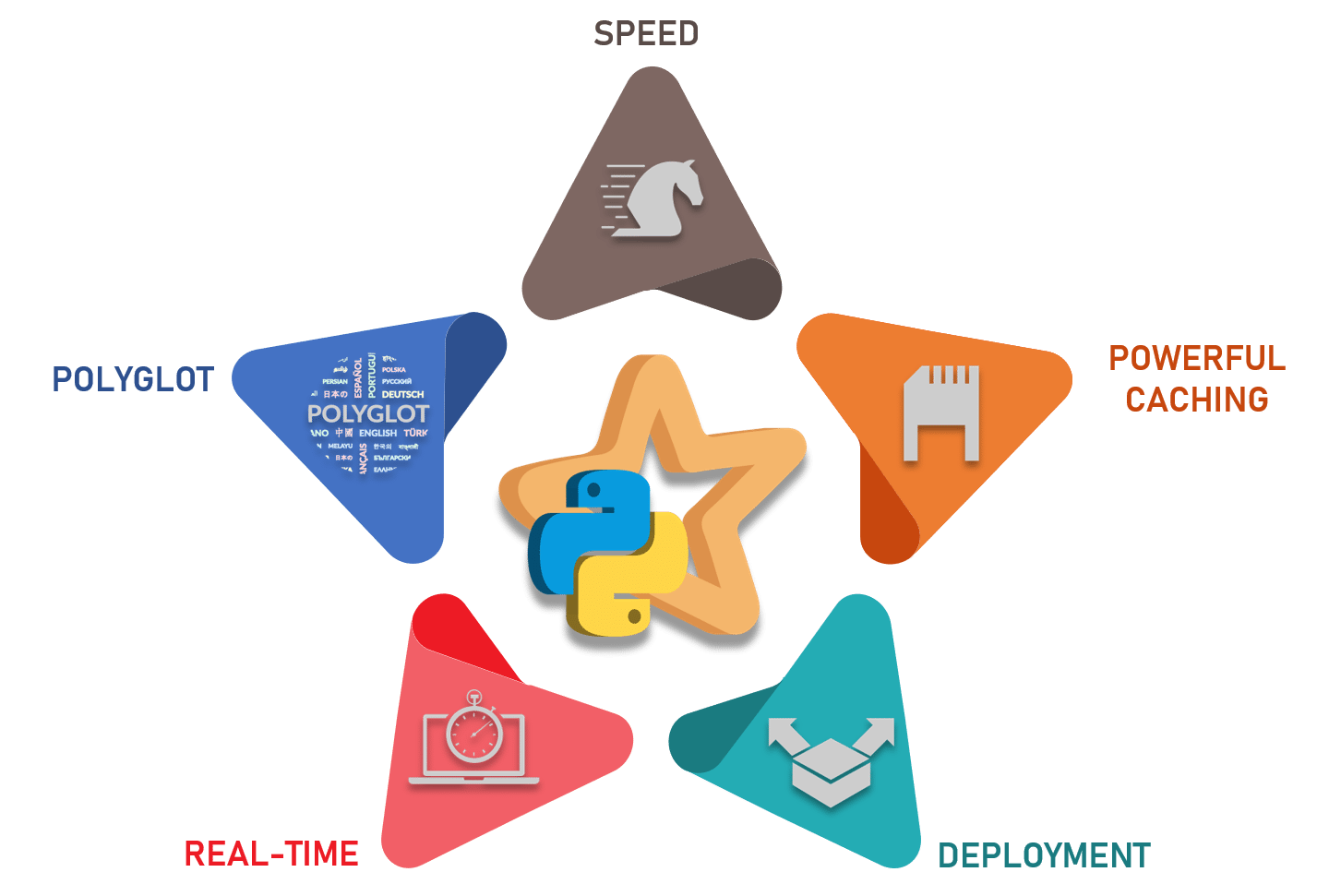

Originally written in Scala Programming Language, the open source community has developed an amazing tool to support Python for Apache Spark. PySpark helps data scientists interface with RDDs in Apache Spark and Python through its library Py4j. There are many features that make PySpark a better framework than others:

- Speed: It is 100x faster than traditional large-scale data processing frameworks

- Powerful Caching: Simple programming layer provides powerful caching and disk persistence capabilities

- Deployment: Can be deployed through Mesos, Hadoop via Yarn, or Spark’s own cluster manager

- Real Time: Real-time computation & low latency because of in-memory computation

- Polyglot: Supports programming in Scala, Java, Python and R

Why go for Python?

Easy to Learn: For programmers Python is comparatively easier to learn

because of its syntax and standard libraries. Moreover, it’s a

dynamically typed language, which means RDDs can hold objects of

multiple types.

A vast set of Libraries: Scala does not have sufficient data science tools and libraries like Python for machine learning and natural language processing. Moreover, Scala lacks good visualization and local data transformations.

A vast set of Libraries: Scala does not have sufficient data science tools and libraries like Python for machine learning and natural language processing. Moreover, Scala lacks good visualization and local data transformations.

Huge Community

Support: Python has a global community with millions of developers that

interact online and offline in thousands of virtual and physical

locations.

No comments:

Post a Comment